Web Scraper allows you to build sitemaps from different types of selectors. Beautiful Soup is a Python package for parsing HTML and XML documents and extracting data from them. Disclaimer: it is easy to get lost in the urllib universe in Python. Web scraping is an automated, programmatic process through which data can be continuously 'scraped' off webpages.
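Beautiful Soup itself is a third-party package (`pip install beautifulsoup4`). The sketch below avoids that dependency and uses the standard library's `html.parser.HTMLParser`. Beautiful Soup uses this same built-in parser when you pass `"html.parser"`. The sample page and the `LinkCollector` / `extract_links` names are hypothetical and exist only for illustration.

```python
from html.parser import HTMLParser


class LinkCollector(HTMLParser):
    """Collect (href, link text) pairs from <a> tags in an HTML document."""

    def __init__(self):
        super().__init__()
        self.links = []    # finished (href, text) pairs
        self._href = None  # href of the <a> tag we are currently inside, if any
        self._text = []    # text fragments seen inside that <a> tag

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            # attrs is a list of (name, value) tuples; <a> without href is skipped
            self._href = dict(attrs).get("href")
            self._text = []

    def handle_data(self, data):
        if self._href is not None:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._href is not None:
            self.links.append((self._href, "".join(self._text).strip()))
            self._href = None


def extract_links(html):
    parser = LinkCollector()
    parser.feed(html)
    parser.close()
    return parser.links


# Hypothetical sample page
SAMPLE = """
<html><body>
  <h1>Listings</h1>
  <a href="/jobs/1">Python intern</a>
  <a href="/jobs/2">Data <b>analyst</b></a>
</body></html>
"""

print(extract_links(SAMPLE))
# [('/jobs/1', 'Python intern'), ('/jobs/2', 'Data analyst')]
```

In Beautiful Soup you would typically do the same job with `find_all("a")`, then read `tag.get("href")` and `tag.get_text()` on each result.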

It makes web … It’s pretty easy to do this.

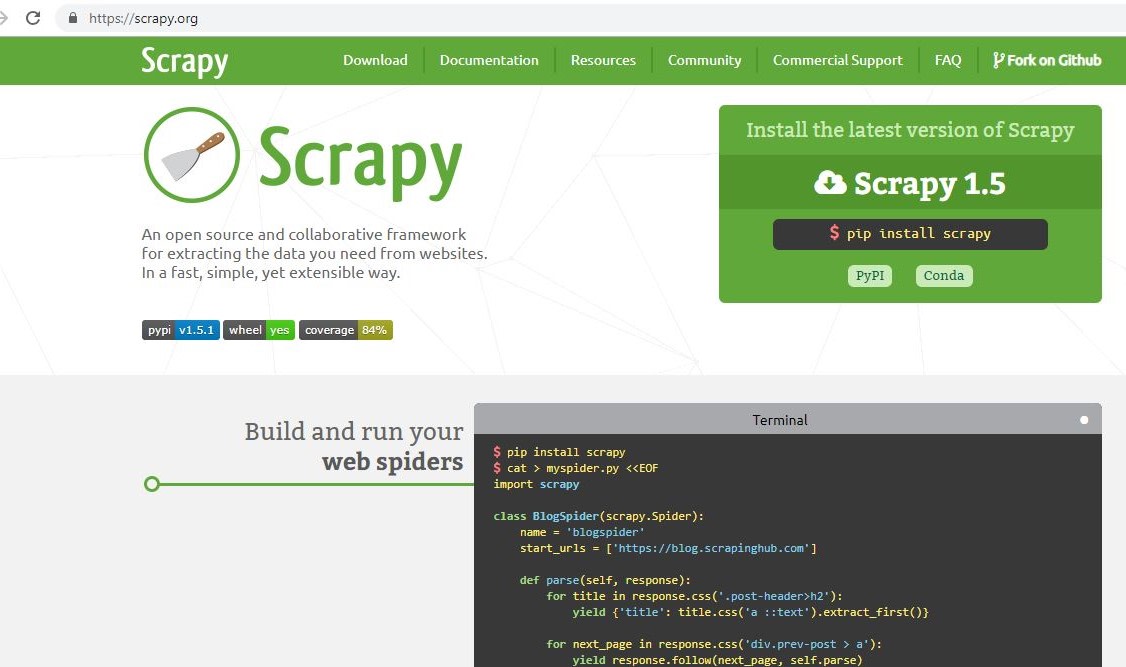

Scrapy is a Python framework for web scraping that gives developers a complete package, so they don't have to worry about writing and maintaining that code themselves.

pip is a Python package manager that installs Python libraries and their dependencies automatically from a package repository (PyPI by default).

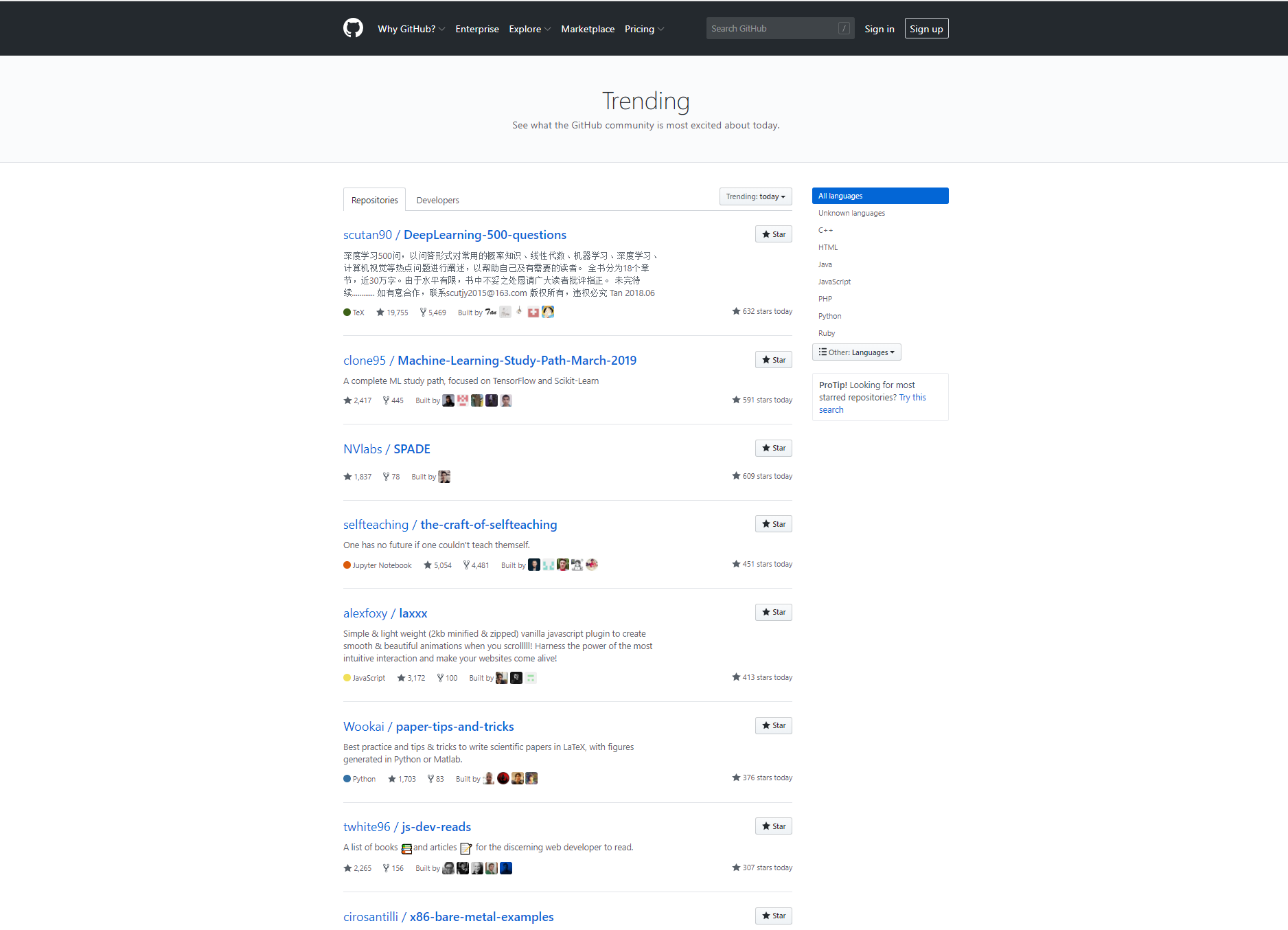

WEBSCRAPER SCRAPY EXAMPLE GITHUB CODE

The code from this tutorial can be found on my GitHub.

WEBSCRAPER SCRAPY EXAMPLE GITHUB INSTALL

Install Scrapy at a location and run it from there. lxml is a Python library that parses HTML/XML and evaluates XPath/CSS selectors. It wasn't as straightforward as I expected, so I've decided to write a tutorial for it.

Internshala: Python code for scraping internships from Internshala.

Additional Python libraries required:

- BeautifulSoup: `pip install beautifulsoup4`
- Pandas: `pip install pandas`
- Requests: `pip install requests`

Usage: this directory contains 2 Python files:

Scrapy | A Fast and Powerful Scraping and Web Crawling Framework.
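Before you run the scripts, you may want to confirm that the libraries listed above can be imported. Here is a minimal sketch that uses the standard library's `importlib.util.find_spec`. Some import names differ from their pip names: `beautifulsoup4` installs the `bs4` module. The `REQUIRED` mapping and the `missing_packages` helper are hypothetical.

```python
import importlib.util

# Hypothetical mapping: pip distribution name -> module name used in `import`
REQUIRED = {
    "beautifulsoup4": "bs4",
    "pandas": "pandas",
    "requests": "requests",
}


def missing_packages(required=REQUIRED):
    """Return the pip names of packages whose top-level module cannot be found."""
    return [
        pip_name
        for pip_name, module_name in required.items()
        # find_spec locates a top-level module without importing it
        if importlib.util.find_spec(module_name) is None
    ]


if __name__ == "__main__":
    missing = missing_packages()
    if missing:
        print("Missing packages, install with: pip install " + " ".join(missing))
    else:
        print("All required packages are installed.")
```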

The automated gathering of data from the internet is nearly as old as the internet itself. Python is a very popular programming language in the coding community today.

Spider #2: Scraping Post Data

I've added a timer to my script so I can see how long it takes to run. The start_requests function iterates through a list of user_accounts and sends a request to Instagram for each one with yield scrapy.Request(get_url(url), callback=self.parse); the callback passes each response to the parse function.

We’ll be expanding on our scheduled web scraper by integrating it into a Django web app.

Build scrapers, scrape sites and export data in CSV format directly from your browser.

My assigned task was to scrape several sites using Selenium and the Web Scraper plugin, so I believe I can do this work well.

Maintained by Zyte (formerly Scrapinghub) and many other contributors.

Navigate to the folder and let’s first create a virtual environment.

Steps involved in web scraping: send an HTTP request to the URL of the webpage you want to access.

What Is Web Scraping?

Example of web scraping using Python and BeautifulSoup.

MySQL, MongoDB, Redis, SQLite, Elasticsearch, and PostgreSQL with SQLAlchemy as database backends.

“Inspect element” (right-click on the title element and select Inspect Element): get the HTML after executing all of the page's source code, including JavaScript.

A simple web scraper robot implemented as a Python script (tasks.py) instead of a .robot file, using the rpaframework set of libraries. Whacked this together to save some time checking out the daily stats.

Then, in the scraper…

- ruia: async Python 3.6+ web scraping micro-framework based on asyncio
- ioweb: web scraping framework based on gevent and lxml

Let’s import the modules we’ll use in this project. For this task, we will use Requests (python-requests), a third-party HTTP library for Python.
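The scraping steps mentioned above, sending an HTTP request and then extracting data from the response, can be combined with the execution timer in a short standard-library sketch. It makes a few substitutions, each a deliberate choice:

- It uses `urllib.request` instead of the third-party Requests library.
- It pulls out the `<title>` with a regular expression. That is adequate for a single tag, but it is not a general HTML parser.
- It exports results with `csv` and measures elapsed time with `time.perf_counter`.

The function names, the User-Agent string, and the `fetcher` parameter are all assumptions made for illustration. The `fetcher` parameter lets the demo run without network access.

```python
import csv
import io
import re
import time
import urllib.request

# A regex is enough for one <title> tag; use a real HTML parser for anything richer.
TITLE_RE = re.compile(r"<title[^>]*>(.*?)</title>", re.IGNORECASE | re.DOTALL)


def fetch(url, timeout=10):
    """Send an HTTP GET request to `url` and return the decoded response body."""
    # Hypothetical User-Agent string; some sites reject requests without one.
    req = urllib.request.Request(url, headers={"User-Agent": "example-scraper/0.1"})
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        charset = resp.headers.get_content_charset() or "utf-8"
        return resp.read().decode(charset, errors="replace")


def scrape_titles(urls, fetcher=fetch):
    """Fetch each URL and extract its <title> text (empty string if missing)."""
    rows = []
    for url in urls:
        match = TITLE_RE.search(fetcher(url))
        # Collapse internal whitespace and newlines inside the title.
        title = " ".join(match.group(1).split()) if match else ""
        rows.append({"url": url, "title": title})
    return rows


def to_csv(rows):
    """Serialize rows as CSV text; write this to a file for a real export."""
    buf = io.StringIO()
    writer = csv.DictWriter(buf, fieldnames=["url", "title"])
    writer.writeheader()
    writer.writerows(rows)
    return buf.getvalue()


def timed(func, *args, **kwargs):
    """Run func and return (result, elapsed seconds), like a script-level timer."""
    start = time.perf_counter()
    result = func(*args, **kwargs)
    return result, time.perf_counter() - start


# Offline demo: a dict lookup stands in for the network so this runs anywhere.
fake_pages = {"https://example.com/": "<html><head><title> Example Domain </title></head></html>"}
rows, seconds = timed(scrape_titles, list(fake_pages), fetcher=fake_pages.__getitem__)
print(to_csv(rows))
print(f"Finished in {seconds:.4f} s")
```

Against a live site, call `timed(scrape_titles, urls)` without the `fetcher` argument so the default `fetch` is used. This version fetches pages one at a time. Scrapy schedules requests asynchronously, which is one reason to reach for it on larger jobs.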
XML Path Language (XPath) and regular expressions are used to define rules for filtering content and web traversal.
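As a small illustration of how XPath and regular expressions can split that work, the sketch below pairs the two:

- An XPath expression selects candidate links by their position in the document.
- A regular expression decides which of those links to follow.

It uses the standard library's `xml.etree.ElementTree`, which has two limitations here. It supports only a limited subset of XPath; full XPath 1.0 needs a library such as lxml. It also parses only well-formed XML/XHTML. The sample page, the `/jobs/<number>` URL rule, and `job_links` are hypothetical.

```python
import re
import xml.etree.ElementTree as ET

# Hypothetical, well-formed XHTML snippet (ElementTree cannot parse sloppy HTML)
PAGE = """<html>
  <body>
    <div class="listing"><a href="/jobs/101">Python intern</a></div>
    <div class="listing"><a href="/ads/7">Sponsored</a></div>
    <div class="listing"><a href="/jobs/102">Web scraping intern</a></div>
    <div class="footer"><a href="/about">About us</a></div>
  </body>
</html>"""

# Traversal rule: only follow links whose path looks like /jobs/<number>
JOB_URL = re.compile(r"^/jobs/\d+$")


def job_links(xml_text):
    root = ET.fromstring(xml_text)
    # XPath subset: every <a> directly inside a <div class="listing">
    anchors = root.findall(".//div[@class='listing']/a")
    # Regex filter: drop listing links that do not match the job URL pattern
    return [
        (a.get("href"), a.text)
        for a in anchors
        if JOB_URL.match(a.get("href", ""))
    ]


print(job_links(PAGE))
# [('/jobs/101', 'Python intern'), ('/jobs/102', 'Web scraping intern')]
```

The XPath step drops the footer link, and the regex step drops the sponsored `/ads/7` entry that sits inside a listing.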
